Our new paper, “Analysis of Q-learning with Adaptation and Momentum Restart for Gradient Descent”, has recently been accepted to the 29th International Joint Conference on Artificial Intelligence (IJCAI 2020).

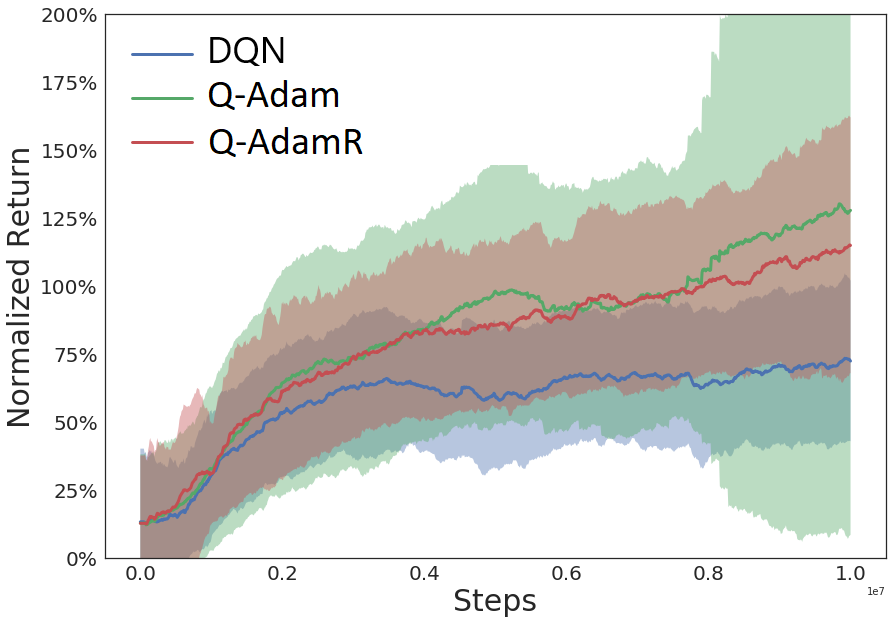

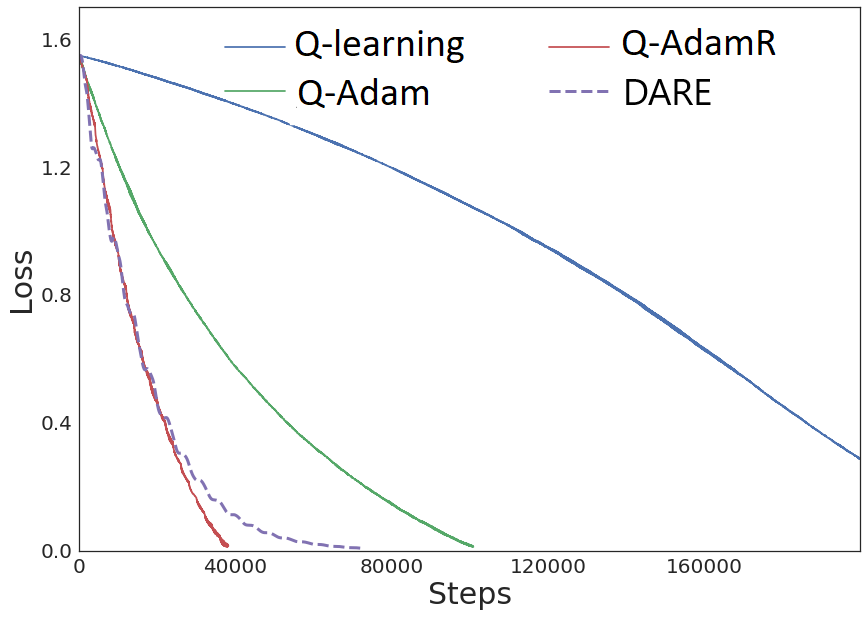

Existing convergence analyses of Q-learning mostly focus on vanilla stochastic gradient descent (SGD) type updates. Although Adaptive Moment Estimation (Adam) is commonly used in practical Q-learning algorithms, no convergence guarantee has been provided for Q-learning with this type of update. In this paper, we first characterize the convergence rate of Q-AMSGrad, the Q-learning algorithm with the AMSGrad update (a commonly adopted alternative to Adam for theoretical analysis). To further improve performance, we propose incorporating a momentum restart scheme into Q-AMSGrad, resulting in the so-called Q-AMSGradR algorithm, and we also establish its convergence rate. Our experiments on a linear quadratic regulator (LQR) problem show that the two proposed Q-learning algorithms outperform vanilla Q-learning with SGD updates. The two algorithms also perform significantly better than the DQN learning method over a batch of Atari 2600 games.
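To make the update rule concrete, here is a minimal sketch of an AMSGrad step applied to a Q-learning semi-gradient, with an optional momentum restart. It is an illustration only, not the implementation from the paper: the one-state, two-action toy problem, the helper names (`init_state`, `amsgrad_step`, `run_toy`), the hyperparameters, and in particular the restart rule (zeroing the first moment every `restart_period` steps) are all assumptions made for this example.

```python
import math

def init_state(n):
    """Optimizer state for n parameters (all names here are hypothetical)."""
    return {"t": 0, "m": [0.0] * n, "v": [0.0] * n, "v_hat": [0.0] * n}

def amsgrad_step(theta, grad, state, lr=0.01, beta1=0.9, beta2=0.999,
                 eps=1e-8, restart_period=None):
    """Apply one AMSGrad update and return the new parameter list."""
    state["t"] += 1
    # Assumed restart rule: reset the momentum (first moment) periodically.
    if restart_period and state["t"] % restart_period == 0:
        state["m"] = [0.0] * len(theta)
    new_theta = []
    for i, (th, g) in enumerate(zip(theta, grad)):
        m = beta1 * state["m"][i] + (1 - beta1) * g        # first moment
        v = beta2 * state["v"][i] + (1 - beta2) * g * g    # second moment
        v_hat = max(state["v_hat"][i], v)                  # AMSGrad: running max
        state["m"][i], state["v"][i], state["v_hat"][i] = m, v, v_hat
        new_theta.append(th - lr * m / (math.sqrt(v_hat) + eps))
    return new_theta

def run_toy(steps=2000, restart_period=None, gamma=0.5):
    """Toy problem: one state, two actions with rewards 1 and 0, self-loop."""
    rewards = [1.0, 0.0]
    q = [0.0, 0.0]
    state = init_state(len(q))
    for step in range(steps):
        a = step % 2                                # alternate between actions
        target = rewards[a] + gamma * max(q)        # bootstrapped TD target
        grad = [0.0, 0.0]
        grad[a] = q[a] - target                     # semi-gradient of squared TD error
        q = amsgrad_step(q, grad, state, restart_period=restart_period)
    return q

if __name__ == "__main__":
    print("no restart:  ", run_toy())
    print("with restart:", run_toy(restart_period=50))
```

The only difference from Adam in `amsgrad_step` is the running maximum `v_hat`, which keeps the effective step size from growing again once a large gradient has been seen. The restart variant differs only in the periodic reset of `m`.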

Left figure: Atari game experiments, with performance normalized and averaged over 23 games. Right figure: LQR experiments, with performance evaluated in terms of policy loss.