Research

Reinforcement Learning

Reinforcement learning (RL) is essentially a simulation-based approach to obtaining an approximate solution to an optimal control/Markov decision problem. Due to the popularity of deep learning, there has been growing interest in using deep neural networks to solve RL problems. This has led to notable successes in RL, especially in playing various Atari games. However, existing methods are often purely algorithmic. When applied to complex physical systems, they often suffer from issues like poor sample complexity, bad local minima, and lack of physical …

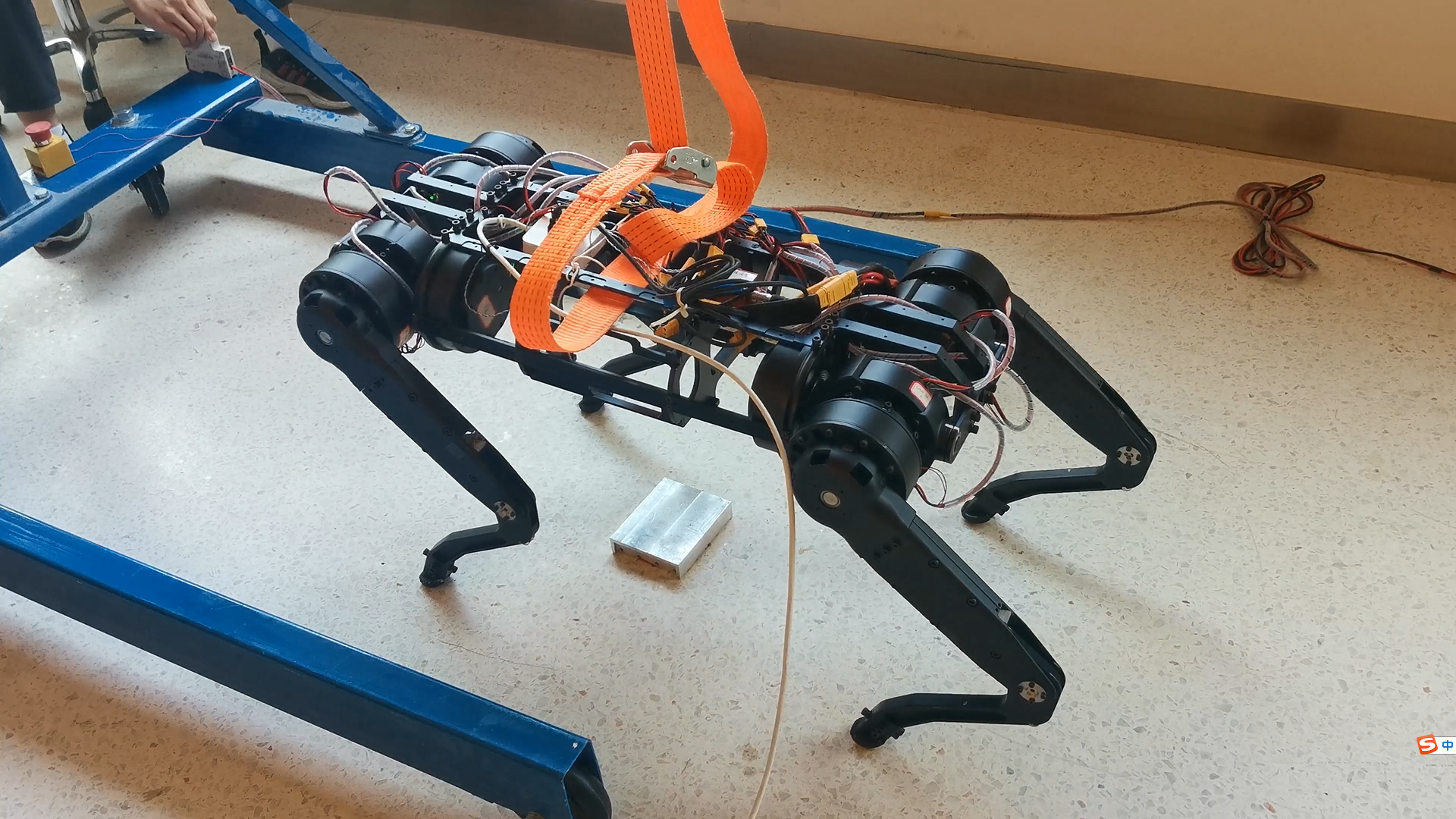

Legged Locomotion

Legged robots are among the most challenging robotic systems, with rather complex dynamics. Although there are many cool videos of legged robots, a principled way to design a legged robot system does not exist in the literature, especially for dynamic locomotion. Numerous engineering tricks are needed to produce a cool demo, and these usually work only in very specific scenarios. We are interested in advancing the “science” of legged robots and aim to develop new theoretical and algorithmic tools to enable more formal/systematic solution …

Dynamic Manipulation

Manipulation is one of the most classical problems in robotics. Recently, the field has gained considerable momentum, with tremendous interest from industry (e.g. manufacturing, logistics). This is partly due to recent progress in computer vision and deep learning, which has enabled new application scenarios. Existing applications are mostly about pick and place. Our lab is interested in more challenging dynamic manipulation problems, where dynamics, motion planning, and control play a very important role. Existing software tools such as OpenRAVE, MoveIt!, …

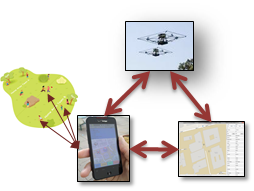

Multi-Robot Systems

We also study challenging decision and control problems in multi-robot systems. In this area, we mainly consider two classes of problems that can be viewed as dual to each other. One is cooperative pursuit, namely, developing control strategies for a team of agents to capture an evader. This is a classic problem in robotics with rich applications in surveillance, search and rescue, battlefield automation, etc. It is essentially a multi-agent adversarial game whose optimal solution is intractable due to the curse …