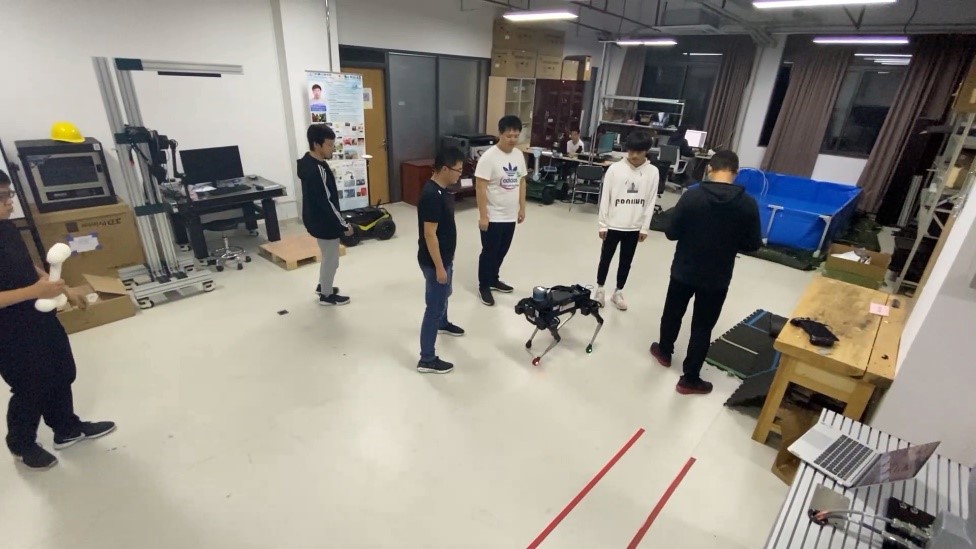

To enable quadruped robot to autonomously navigate through complex environment with dynamic obstacles, we have recently developed and validated two types of collision avoidance policies. The first type of policy is represented by a deep neural network trained with typical deep reinforcement learning algorithms (e.g. PPO). The input to the network is the raw Lidar data and the output of the network is the motion control command for the quadruped. The second type of policy uses only 2D Lidar and RGB-D camera, and is designed using a “model-based” approach, for which we first extract obstacle information from the camara and scanner and then develop an optimization-based algorithm to find the “best” motion control. The learning-based avoidance strategy is conceptually simple but requires a 3D Lidar sensor, which is expensive. On the other hand, the model-based avoidance strategy requires more insight but can run with very cheap camara and 2D lidar sensors. Both types of algorithms are validated on real quadruped robots in the lab.